UX CASE STUDY

Skyway Helper

Design conceptualization and prototyping of a new wayfinding smartphone app using augmented reality activated by scanning spatially located physical signage.

TL;DR

After observing users having trouble navigating the Minneapolis Skyway system, I created a novel design concept to assist them using an augmented reality smartphone app that syncs with traditional wayfinding signage.

Introduction

Approximately 285,000 pedestrians use the Minneapolis Skyway System (Skyway) every day (pre-COVID-19). Many of them get lost in the enclosed, elevated walkway system that connects buildings throughout the heart of the city, or hesitate to explore new areas of it.

To help these users, I developed a Skyway wayfinding app design concept utilizing augmented reality and physical signage that works within constraints imposed by the unique characteristics and limitations of the walkway system.

Problem Space

The Skyway is a collection of elevated, enclosed walkways (and several tunnels) allowing pedestrians to travel between buildings in the downtown area. The system consists of unfamiliar-looking passages, inconsistent and unclear signage, changes in height, and areas with no windows to the outside world.

Individual sections of the system are built and maintained by private building owners. Comprehensive indoor positioning systems are difficult to implement because of private building owners’ differing levels of interest in the Skyway.

Furthermore, since the system is indoors, GPS is unreliable, reducing the usefulness of existing mapping apps.

Research

The concept for the Skyway Helper native smartphone app grew out of interviews with several new Skyway users about their experiences navigating the system. The users indicated that the two most popular and effective ways to navigate were personal directions (verbal) and physical wayfinding signage (spatial). What if the essence of these two methods of navigation were combined into a smartphone app?

After extensive secondary research into various aspects of Augmented Reality (AR), it was determined that AR could be utilized to create an improved wayfinding experience for Skyway users.

Solution Part 1: Physical Signage

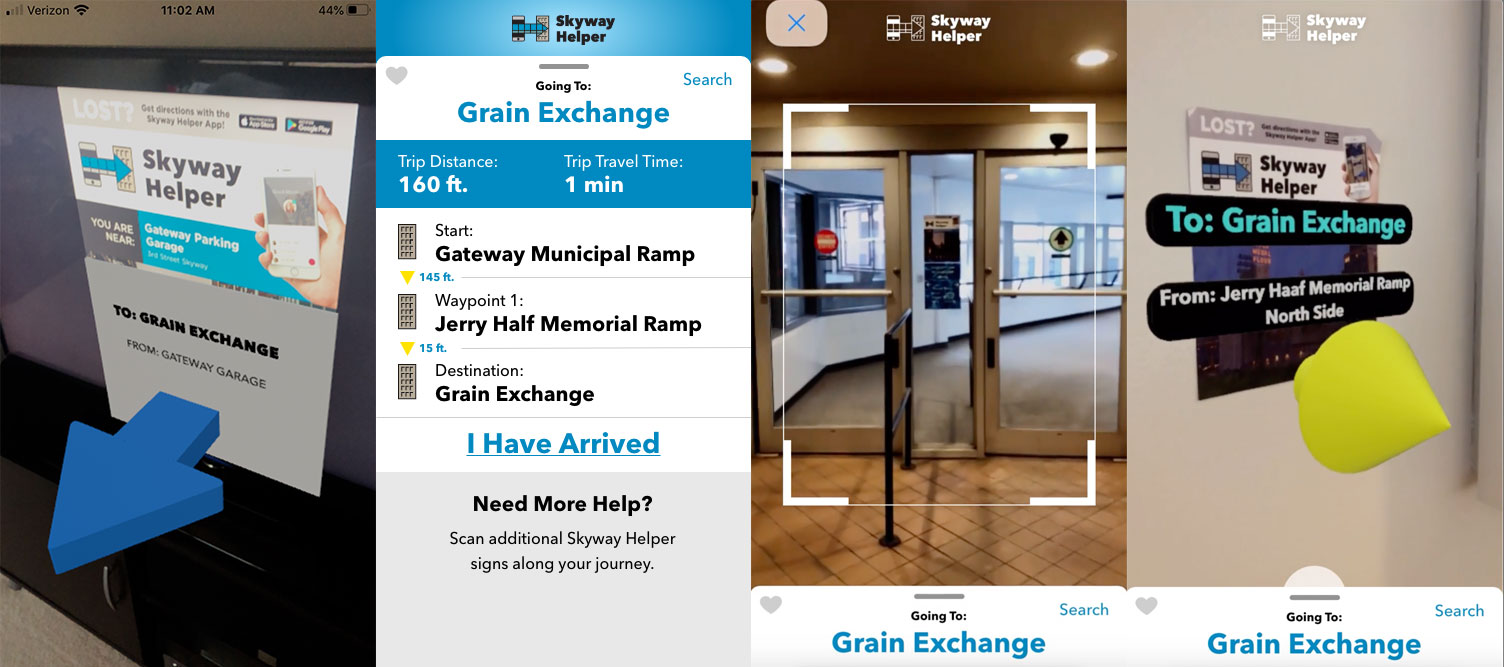

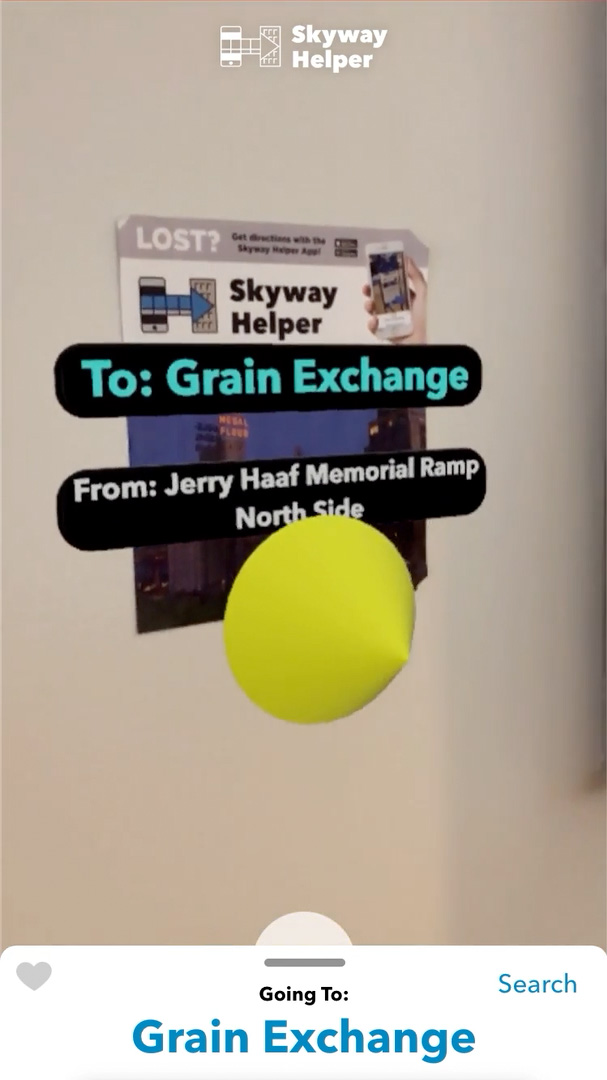

To activate the Augmented Reality (AR) experience, the app user points their smartphone camera at signage located throughout the Skyway system.

Each sign’s content consists of branding and app marketing, current location and a unique image. Each sign’s unique image is tied to a specific location in the app’s digital model of the Skyway, allowing the software to pinpoint the user’s location in that digital model when the AR experience is activated.
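The link between a sign's unique image and a location in the digital model can be pictured as a simple lookup. The following is a minimal sketch, not the app's implementation: the `SignLocation` fields, the `SIGN_REGISTRY` entries, the image IDs and the coordinate units are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SignLocation:
    """Where a sign sits in the digital model (all fields are assumptions)."""
    building: str        # hypothetical building name
    level: int           # floor level the sign is mounted on
    x_m: float           # position in the model, assumed to be in metres
    y_m: float
    heading_deg: float   # direction the sign faces, in degrees


# Hypothetical registry: each sign's unique image ID maps to exactly one location.
SIGN_REGISTRY = {
    "sign-0001": SignLocation("Building A", 2, 12.5, 40.0, 90.0),
    "sign-0002": SignLocation("Building B", 2, 88.0, 41.5, 270.0),
}


def locate_user(scanned_image_id: str) -> Optional[SignLocation]:
    """Return the model location for a scanned sign, or None if unrecognized."""
    return SIGN_REGISTRY.get(scanned_image_id)
```

Because each sign is an independent entry, a building owner could add a single sign to such a registry without any change to the rest of the system.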

The app would work with as few as one sign in one building, but would work best with hundreds of signs spread throughout the Skyway. This concept allows an incremental, building-by-building rollout within the privately owned system.

Solution Part 2: Native App

A. Low-Fidelity Prototype

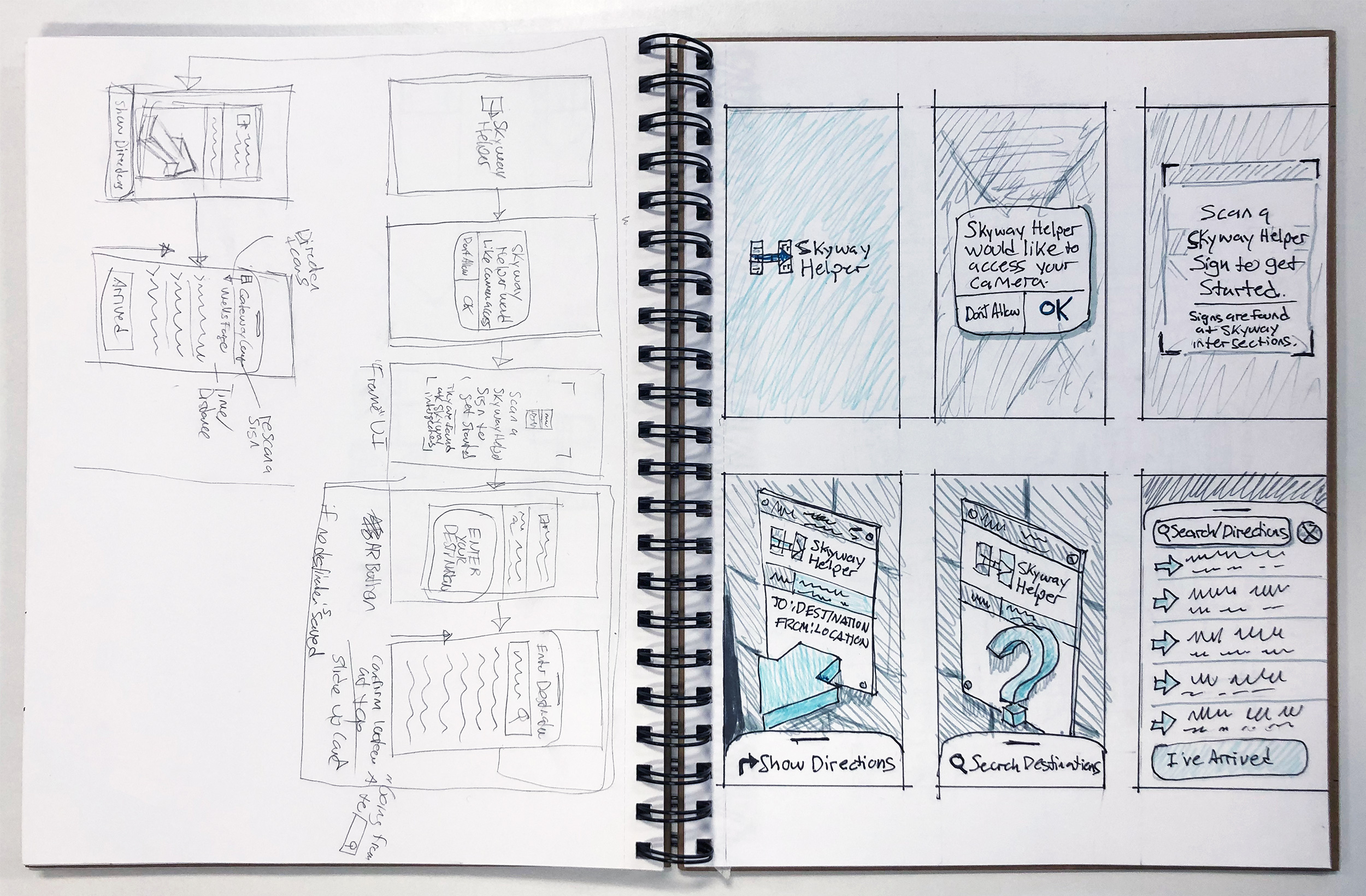

Hand-sketched screen flows were created as a starting point for testing the hypothesis that AR could be used as an effective wayfinding tool for Skyway users.

The sketched screens were moved into Marvel POP for informal user testing. After testing the sketched prototype, it was determined that, because of the cutting-edge nature of AR, a high-fidelity prototype would need to be developed to ascertain users’ reactions to the concept.

B. High-Fidelity Prototypes

Adobe XD, Apple Reality Composer and ProtoPie were selected as the most suitable high-fidelity prototyping tools. UI components and a clickable static prototype were created in XD for testing. A separate AR experience was created in Apple Reality Composer for testing. Several rounds of design iterations were tested with users and refined.

A final prototype was created in ProtoPie to test integration with the smartphone's tilt sensors: the directions card is shown when the phone is tilted down toward the floor (walking), and the camera is activated when the phone is held upright (AR experience).
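The tilt behavior can be sketched as a small state switch. This is an illustration only, not the logic of the tested prototype: it assumes a pitch angle in degrees where 0 means the phone is flat and 90 means it is held upright, and the two thresholds and the mode names are invented for the example.

```python
# Assumed thresholds (hypothetical values, not taken from the prototype).
CAMERA_ON_DEG = 60.0   # at or above this pitch, switch to the AR camera
CARD_ON_DEG = 40.0     # at or below this pitch, switch to the directions card


def next_mode(current_mode: str, pitch_deg: float) -> str:
    """Pick the app mode for a pitch reading (0 = flat, 90 = upright).

    Readings between the two thresholds keep the current mode, so small
    hand movements while walking do not make the screen flicker.
    """
    if pitch_deg >= CAMERA_ON_DEG:
        return "ar_camera"
    if pitch_deg <= CARD_ON_DEG:
        return "directions_card"
    return current_mode


# Example: the user raises the phone, wobbles slightly, then lowers it.
mode = "directions_card"
for reading in (20.0, 75.0, 50.0, 30.0):
    mode = next_mode(mode, reading)
```

The gap between the two thresholds is a design choice: a single cutoff would toggle the view repeatedly whenever the phone hovered near that angle.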

C. Presentation Prototype

To best convey the overall app experience, a final presentation prototype was created by screen-recording the AR experience and combining it with the app UI in ProtoPie. A video of a scripted test scenario was then captured, shown below.

Version 2.0 and Beyond

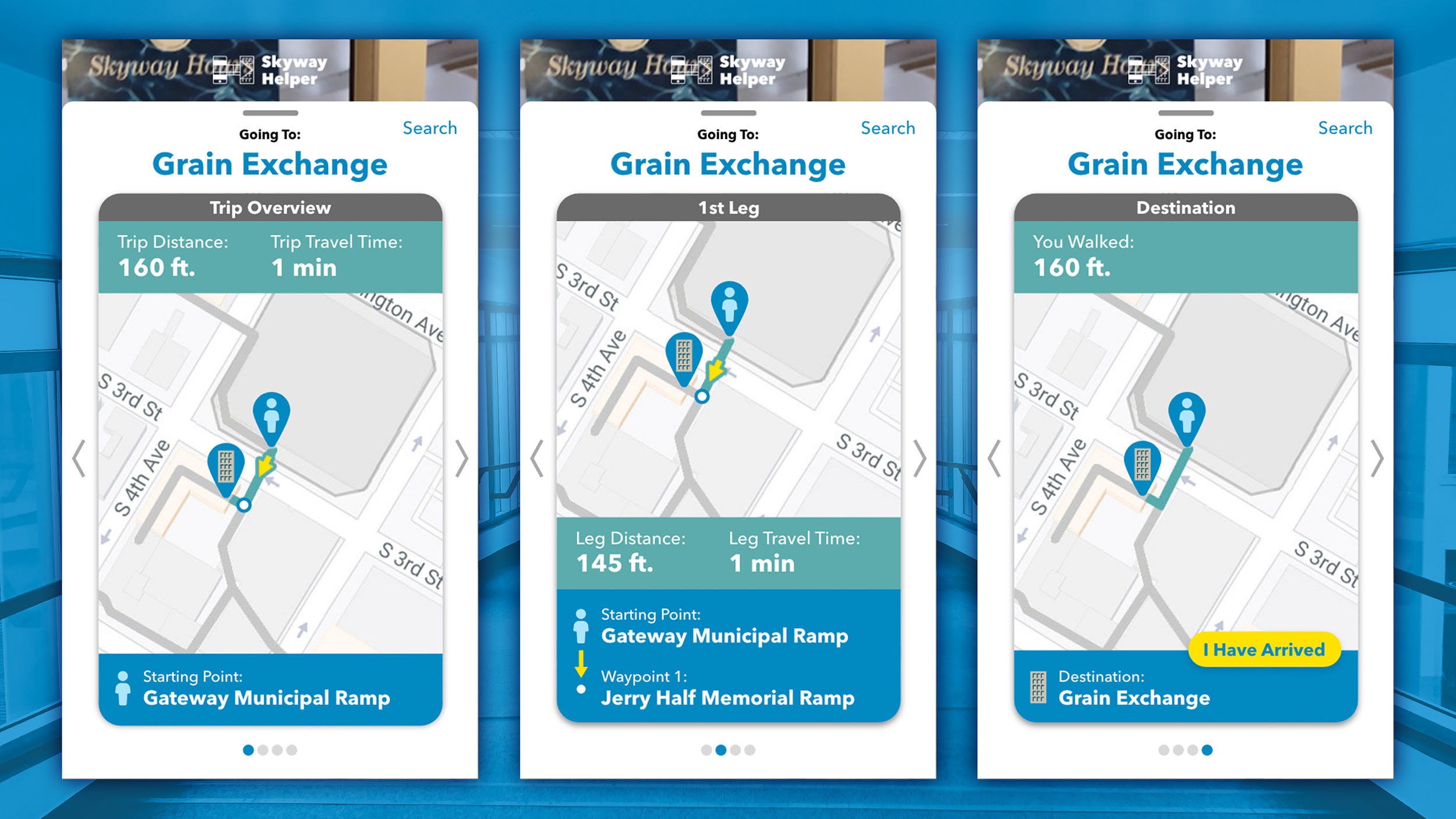

A. Map Views

Future app states could include map views of the overall journey, separate legs and completion. This could be implemented now, since the position of the scanned sign is known. If indoor positioning systems (like indoor GPS) become common, more app features are possible, like showing the user’s current position on the map.
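As a rough illustration of how journey legs might be derived once the scanned sign's position is known, the sketch below runs a breadth-first search over a graph of connected buildings. The graph, the building names and the `route_legs` function are hypothetical; a real map view would draw on the app's digital model of the Skyway.

```python
from collections import deque

# Hypothetical connectivity: which buildings are directly linked by a walkway.
SKYWAY_LINKS = {
    "Building A": ["Building B"],
    "Building B": ["Building A", "Building C", "Building D"],
    "Building C": ["Building B", "Building E"],
    "Building D": ["Building B"],
    "Building E": ["Building C"],
}


def route_legs(start, destination):
    """Return the journey as a list of (from, to) legs, or None if unreachable.

    Breadth-first search finds a route with the fewest walkway crossings.
    """
    if start == destination:
        return []
    previous = {start: None}          # records how each building was reached
    queue = deque([start])
    while queue:
        here = queue.popleft()
        if here == destination:
            break
        for neighbor in SKYWAY_LINKS.get(here, []):
            if neighbor not in previous:
                previous[neighbor] = here
                queue.append(neighbor)
    if destination not in previous:
        return None
    legs = []
    node = destination
    while previous[node] is not None:  # walk back from destination to start
        legs.append((previous[node], node))
        node = previous[node]
    legs.reverse()
    return legs
```

Each leg could then back one of the "separate legs" map states, with an empty list of remaining legs signaling completion.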

B. Wearables Integration

Indoor positioning would also allow integration with wearables, improving accessibility and providing turn-by-turn directions to earphones or smart watches. It would even be possible to call out landmarks verbally for people who navigate that way.

Appendix – Daily UI Challenge

For a Daily UI Challenge, I created a sign-up page for the app so users can save their favorite spots in the Skyway. I used iOS patterns to create a seamless sign-up flow.